SmartBody includes functionality to retarget, or adapt, motion from one character to another. This is an important feature because it allows animation originally constructed for one character to be shared by other characters.

How Retargeting Works

Motion retargeting transforms a motion originally created for one character so that it can be applied to a new character. The trivial approach is to apply the motion directly to the new character, which means simply copying the joint angles in the motion over to the new character. However, this trivial approach has several problems:

- The joint names of the two characters may differ. For example, a joint named "r_wrist" in one skeleton may correspond to the joint named "RightHand" in another skeleton. These joint pairs must be matched correctly so that the joint angles in a motion are copied to the proper joints.

- The two characters may have different initial poses. For example, some characters use a T-pose as their initial pose while others use an A-pose. The same joint rotation therefore produces different results on different initial poses.

- Corresponding joints in the two characters may have different local rotation frames. For example, "rotate the shoulder joint by 90 degrees about the X-axis" may raise the arm on one character but move the arm forward on another.

- Because the two characters have different proportions and scales, the copied motion may show artifacts such as foot sliding on the new character.
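To make the initial-pose problem concrete, the short Python sketch below copies one joint rotation verbatim onto a T-pose arm and an A-pose arm. It is a simplified illustration, not SmartBody code: it assumes the shoulder's parent frame is the identity, so the bone's world direction is just the copied rotation applied to its rest direction, and the helpers `rot_z` and `apply` are hypothetical.

```python
import math

def rot_z(deg):
    """Right-handed 3x3 rotation matrix about the Z axis."""
    r = math.radians(deg)
    c, s = math.cos(r), math.sin(r)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def apply(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

# Hypothetical rest-pose directions of a right upper arm (X right, Y up, Z forward).
t_pose_arm = (1.0, 0.0, 0.0)                          # arm held straight out
a_pose_arm = (math.sqrt(0.5), -math.sqrt(0.5), 0.0)   # arm 45 degrees down

# One joint rotation from the source motion, copied verbatim onto both skeletons.
copied_rotation = rot_z(45)

print(apply(copied_rotation, t_pose_arm))  # arm ends up 45 degrees above horizontal
print(apply(copied_rotation, a_pose_arm))  # arm ends up horizontal
```

The same 45-degree rotation raises the T-pose arm but only brings the A-pose arm up to horizontal, which is why the rest poses have to be aligned before motion is transferred.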

The SmartBody retargeting system addresses these issues to create a new motion that is suitable for the new character.

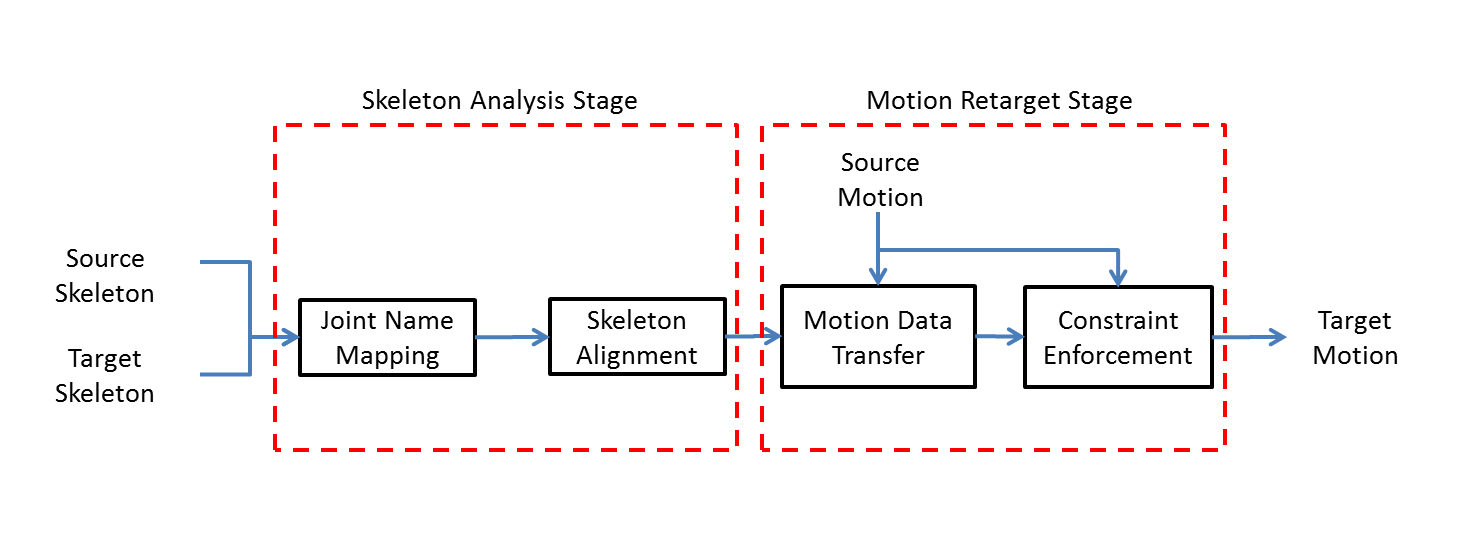

The above diagram summarizes the steps for retargeting. The system takes a source skeleton, its corresponding source motion, and a new target skeleton as input, and produces a new target motion suited to the target skeleton by converting the source motion. This conversion is based on the differences between the source and target skeletons.

Joint Name Mapping

Joint name mapping addresses the issue that two characters may have different joint names. It relies on automatic joint name guessing to map the joint names of both characters to the standard SmartBody joint names; more details can be found in Automatic Skeleton Mapping. Note that our name guessing method is a heuristic algorithm that uses the skeleton topology and conventional joint names to infer each joint's name, so it may at times produce incorrect mappings. The user can later modify the guessed mapping through either the Python API or the GUI.
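As a rough illustration of name guessing, here is a minimal Python sketch that maps both skeletons to standard names through a small alias table and then pairs source and target joints that share a standard name. It is a deliberate simplification: SmartBody's heuristic also uses the skeleton topology, while this sketch looks only at names. The alias table, the prefix stripping, and the functions `guess_standard_names` and `source_to_target` are all hypothetical, and the `overrides` argument merely stands in for the manual corrections a user would make through the Python API or GUI.

```python
import re

# Hypothetical alias table: standard joint name -> names commonly seen in other rigs.
ALIASES = {
    "r_shoulder": ["RightArm", "RightUpperArm", "r_upperarm"],
    "r_elbow": ["RightForeArm", "r_forearm"],
    "r_wrist": ["RightHand", "r_hand"],
}

def normalize(name):
    """Lower-case a joint name, drop common rig prefixes, and remove separators."""
    name = name.lower()
    name = re.sub(r"^(mixamorig:|bip01 )", "", name)
    return re.sub(r"[\s_\-.:]", "", name)

def guess_standard_names(joint_names):
    """Return {original name: standard name} for every joint the alias table recognizes."""
    lookup = {}
    for standard, aliases in ALIASES.items():
        for alias in [standard] + aliases:
            lookup[normalize(alias)] = standard
    return {j: lookup[normalize(j)] for j in joint_names if normalize(j) in lookup}

def source_to_target(source_joints, target_joints, overrides=None):
    """Pair source and target joints that were guessed to share a standard name."""
    src = guess_standard_names(source_joints)
    tgt = {std: name for name, std in guess_standard_names(target_joints).items()}
    mapping = {s: tgt[std] for s, std in src.items() if std in tgt}
    mapping.update(overrides or {})  # manual corrections win over guesses
    return mapping

print(source_to_target(["r_shoulder", "r_elbow", "r_wrist"],
                       ["RightArm", "RightForeArm", "RightHand"]))
# {'r_shoulder': 'RightArm', 'r_elbow': 'RightForeArm', 'r_wrist': 'RightHand'}
```

A name the alias table does not recognize, such as `Hand_R`, is left unmapped and has to be supplied through `overrides`, which mirrors how a wrong or missing guess is fixed by hand.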

Skeleton Alignment

Skeleton alignment addresses the problem that two characters may have different initial poses and that their corresponding joints may have different local rotation frames.

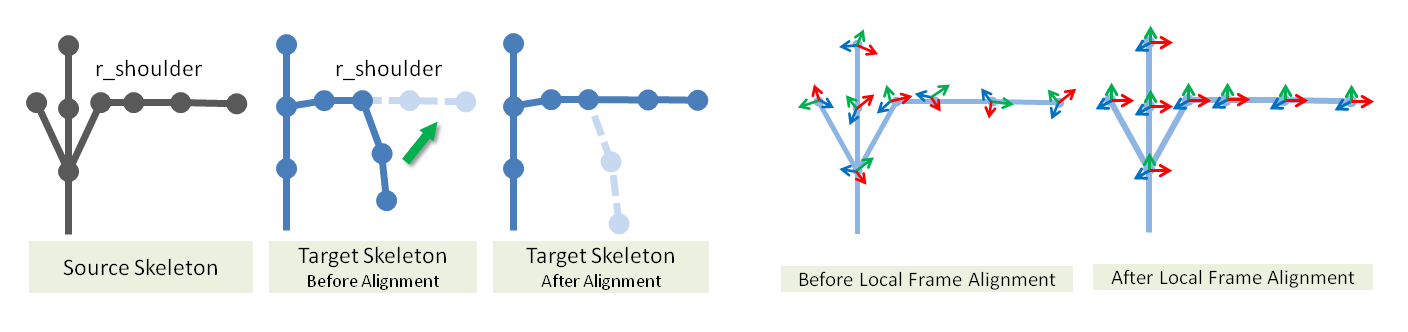

Our method addresses the first problem by aligning the rest poses of the two skeletons. As shown in the image, we recursively rotate each joint in the target skeleton to match the global bone orientation in the source skeleton. We record the alignment rotation at each joint and apply these rotations during retargeting to compensate for the differences in initial pose.
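The recursive rest-pose alignment can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not SmartBody's implementation: each skeleton is stored as global rest positions keyed by already-mapped joint names, joints are visited parents first, and each joint's bone direction is defined by its first child. The shortest-arc rotation used here is our own simplification and leaves the twist about each bone unconstrained. The names `align_rest_pose` and `rotation_between` are hypothetical.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)

def mat_vec(m, v):
    return tuple(dot(row, v) for row in m)

def axis_angle(axis, angle):
    """Rotation matrix for `angle` radians about the unit vector `axis` (Rodrigues' formula)."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    t = 1.0 - c
    return [[t * x * x + c,     t * x * y - s * z, t * x * z + s * y],
            [t * x * y + s * z, t * y * y + c,     t * y * z - s * x],
            [t * x * z - s * y, t * y * z + s * x, t * z * z + c]]

def rotation_between(a, b):
    """Shortest-arc rotation matrix that turns direction a onto direction b."""
    a, b = unit(a), unit(b)
    axis = cross(a, b)
    sin_angle, cos_angle = math.sqrt(dot(axis, axis)), dot(a, b)
    if sin_angle < 1e-9:
        if cos_angle > 0:
            return axis_angle((1.0, 0.0, 0.0), 0.0)  # already aligned: identity
        # Opposite directions: turn 180 degrees about any axis perpendicular to a.
        helper = (1.0, 0.0, 0.0) if abs(a[0]) < 0.9 else (0.0, 1.0, 0.0)
        return axis_angle(unit(cross(a, helper)), math.pi)
    return axis_angle(unit(axis), math.atan2(sin_angle, cos_angle))

def align_rest_pose(order, children, src_pos, tgt_pos):
    """Rotate target joints, parents first, so each bone points like the source bone.

    order: joint names with parents before children (names already mapped).
    children: {joint: [child joints]}; src_pos / tgt_pos: global rest positions.
    Returns ({joint: global alignment rotation}, aligned target positions).
    """
    pos = dict(tgt_pos)
    alignment = {}
    for joint in order:
        kids = children.get(joint, [])
        if not kids:
            continue  # end joint: no bone to align
        bone_child = kids[0]  # simplification: the first child defines the bone
        rot = rotation_between(sub(pos[bone_child], pos[joint]),
                               sub(src_pos[bone_child], src_pos[joint]))
        alignment[joint] = rot
        # Rotate every descendant about this joint so later joints see the new pose.
        stack = list(kids)
        while stack:
            d = stack.pop()
            pos[d] = add(pos[joint], mat_vec(rot, sub(pos[d], pos[joint])))
            stack.extend(children.get(d, []))
    return alignment, pos

# Example: source arm in a T-pose, target arm in an A-pose with a bent elbow.
c = 1.2 / math.sqrt(2.0)
order = ["shoulder", "elbow", "wrist"]
children = {"shoulder": ["elbow"], "elbow": ["wrist"]}
src = {"shoulder": (0.0, 0.0, 0.0), "elbow": (1.0, 0.0, 0.0), "wrist": (2.0, 0.0, 0.0)}
tgt = {"shoulder": (0.0, 0.0, 0.0), "elbow": (c, -c, 0.0), "wrist": (c, -c - 1.0, 0.0)}
alignment, aligned = align_rest_pose(order, children, src, tgt)
```

Because the shoulder is processed before the elbow, the elbow's alignment is computed on the already-rotated arm; the aligned target arm ends up straight along X like the source, while keeping its own bone lengths (1.2 and 1.0).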

The second problem is compensating for different joint local frames. Our method transforms each joint's local frame into a canonical frame whose X, Y, and Z axes point right, up, and forward. This aligns the joint local frames of both characters, and these alignment rotations are likewise used to compensate the final joint rotations during retargeting.
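Expressing a rotation in a canonical frame amounts to a change of basis, which the Python sketch below illustrates. It assumes each joint's rest orientation is given as a 3x3 matrix `F` whose columns are the joint's local X, Y, and Z axes written in the canonical frame; a rotation `R` in local coordinates is then `F R F^T` in canonical coordinates, and the target joint's frame converts it back. The helper names are hypothetical, this is not SmartBody's exact formulation, and rest-pose differences (handled by the previous step) are ignored here.

```python
import math

def rot_x(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(m):
    return [list(row) for row in zip(*m)]

def to_canonical(local_rot, frame):
    """Re-express a rotation written in a joint's local axes in the canonical frame."""
    return matmul(matmul(frame, local_rot), transpose(frame))

def from_canonical(canonical_rot, frame):
    """Re-express a canonical-frame rotation in a joint's local axes."""
    return matmul(matmul(transpose(frame), canonical_rot), frame)

# Source shoulder axes already match the canonical frame; the target shoulder's
# axes are turned 90 degrees about Y, so its local Z axis points along canonical X.
src_frame = rot_y(0)
tgt_frame = rot_y(90)
src_rot = rot_x(90)  # "rotate 90 degrees about local X" on the source
tgt_rot = from_canonical(to_canonical(src_rot, src_frame), tgt_frame)
```

The source's "90 degrees about local X" becomes "90 degrees about local Z" on the target, because the target's local Z axis points where the source's local X axis does; copying the raw rotation instead would move the target arm in a different direction.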

The detailed formulations of these steps are outside the scope of this manual. We refer interested readers to our paper for more details: Automating the Transfer of a Generic Set of Behaviors Onto a Virtual Character.

Motion Data Transfer

Once all the rotation data from skeleton alignment is available, a new target motion can be generated for the new character. Given a source motion for the source skeleton, the system uses the alignment rotations for the initial pose and the local frames to convert the joint rotations of the source motion. The resulting target motion can be applied directly to the new target character. For motions without significant leg movement (e.g., gesture motions), this stage is usually enough to produce reasonable retargeting. For motions with leg movement (e.g., locomotion), however, a constraint enforcement stage may be used to remove foot sliding artifacts.
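The per-frame conversion can be sketched in Python as follows. Rather than composing SmartBody's separate alignment rotations (whose exact formulation is in the paper cited above), this sketch substitutes a common global-orientation formulation for illustration: each source joint's world-space change from its rest orientation is applied to the aligned target rest orientation, and the target's local rotations are then recovered from each parent's orientation. Working in global orientations absorbs the local-frame differences. The data layout (3x3 matrices keyed by already-mapped joint names, parents listed first) and the function `transfer_frame` are assumptions.

```python
import math

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(m):
    return [list(row) for row in zip(*m)]

def mat_vec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def rot_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def transfer_frame(parents, src_rest, src_pose, tgt_rest_aligned):
    """Convert one frame of source global orientations into target local rotations.

    parents: {joint: parent or None}, parents listed before children.
    src_rest / src_pose: source global orientations at rest and at this frame.
    tgt_rest_aligned: target global orientations after rest-pose alignment.
    """
    tgt_global, tgt_local = {}, {}
    for joint, parent in parents.items():
        # World-space change of the source joint relative to its rest orientation...
        delta = matmul(src_pose[joint], transpose(src_rest[joint]))
        # ...applied to the aligned target rest orientation.
        tgt_global[joint] = matmul(delta, tgt_rest_aligned[joint])
        parent_rot = tgt_global[parent] if parent is not None else IDENTITY
        tgt_local[joint] = matmul(transpose(parent_rot), tgt_global[joint])
    return tgt_local

# Source joints use canonical axes; the target's local Z axis runs along the bone.
parents = {"shoulder": None, "elbow": "shoulder"}
src_rest = {"shoulder": IDENTITY, "elbow": IDENTITY}
tgt_rest = {"shoulder": rot_y(90), "elbow": rot_y(90)}
src_pose = {"shoulder": rot_z(45), "elbow": rot_z(45)}  # arm raised 45 degrees, elbow straight
local = transfer_frame(parents, src_rest, src_pose, tgt_rest)
```

Running `transfer_frame` on every frame of the source motion yields the target motion. In the example, the target's bone runs along its local Z axis rather than X, yet after transfer it points in the same direction as the source bone, 45 degrees above horizontal.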

Constraint Enforcement

Since the two characters may have different proportions and sizes, the same motion may look different on each of them. For gesture motions this is usually acceptable. However, for motions that require more precision, such as reaching and locomotion, the difference may produce less accurate, lower-quality animation with foot sliding. In this stage, therefore, IK is used as a post-processing step to adjust the arms or legs so that they follow the correct trajectories in the retargeted motion. The system takes the positional trajectories of the important joints (e.g., the ankle joints for locomotion) and scales them according to the size difference between the two characters. These scaled trajectories are then used as IK targets to adjust the retargeted motion. The result is a new motion whose important joints follow the same trajectories as in the original motion, scaled to the new character.
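A minimal Python sketch of this post-process for one leg follows. Several assumptions are ours, not SmartBody's: the leg is treated in a 2D sagittal plane (x forward, y up), trajectories are scaled about the origin by the ratio of target to source leg length (the text only says "according to the size difference"), and a planar analytic two-bone IK solver stands in for whatever IK SmartBody actually uses. The functions `two_bone_ik` and `retarget_leg` are hypothetical.

```python
import math

def two_bone_ik(hip, target, thigh, shin):
    """Planar two-bone IK: knee position that places the ankle on `target`.

    For a target below the hip the knee bends toward +x (forward).
    An out-of-reach target leaves the leg fully stretched toward it.
    """
    dx, dy = target[0] - hip[0], target[1] - hip[1]
    dist = math.hypot(dx, dy)
    # Clamp to the reachable range so the law of cosines stays valid.
    dist = min(max(dist, abs(thigh - shin) + 1e-9), thigh + shin)
    cos_hip = (thigh ** 2 + dist ** 2 - shin ** 2) / (2.0 * thigh * dist)
    hip_angle = math.acos(max(-1.0, min(1.0, cos_hip)))
    thigh_dir = math.atan2(dy, dx) + hip_angle  # rotate away from the hip->target line
    return (hip[0] + thigh * math.cos(thigh_dir), hip[1] + thigh * math.sin(thigh_dir))

def retarget_leg(src_hips, src_ankles, src_leg, thigh, shin):
    """Scale source hip/ankle trajectories to the target size, then solve each knee.

    The scale factor is the ratio of target to source leg length (an assumption),
    applied about the origin. Returns one (hip, knee, ankle) tuple per frame.
    """
    scale = (thigh + shin) / src_leg
    hips = [(scale * x, scale * y) for x, y in src_hips]
    ankles = [(scale * x, scale * y) for x, y in src_ankles]
    return [(h, two_bone_ik(h, a, thigh, shin), a) for h, a in zip(hips, ankles)]

# Two frames of a hypothetical source step (leg length 1.0) mapped onto a shorter leg.
frames = retarget_leg([(0.0, 0.9), (0.3, 0.92)], [(0.1, 0.1), (0.35, 0.1)],
                      src_leg=1.0, thigh=0.4, shin=0.4)
```

Because both the hip and ankle trajectories are scaled by the same factor, the foot plants keep their relative spacing on the smaller character, and the IK step only has to choose a knee position that keeps both bone lengths fixed.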