SmartBody characters can change their facial expressions and perform lip syncing with prerecorded utterances or with utterances generated by a text-to-speech engine. SmartBody uses Facial Action Coding System (FACS) action units to generate facial expressions, and a procedurally driven algorithm to generate lip syncing. In addition, lip syncing for prerecorded audio can be customized, for example by using the output of third-party facial animation software.

The facial expressions a character can perform are determined by the example poses supplied for that character. Poses can be used either for FACS units, to generate facial expressions, or for visemes, which are used to build parts of a lip syncing sequence.

SmartBody can generate facial expressions for either joint-driven or shape-driven faces. For joint-driven faces, SmartBody generates the joint motion itself. For shape-driven faces, SmartBody determines the activation values of the face shapes, which are then interpreted by the renderer that manages those shapes.

SmartBody applies animation to characters hierarchically. More general motions, such as full-body animation, are applied first; more specific animation, such as facial animation, is applied afterward and overrides any motion that previously controlled the face. As a result, SmartBody's facial controller dictates the motion that appears on the character's face, regardless of any previously applied body motion.

Note that there are no specific requirements for the topology or connectivity of the face; it can contain as many or as few joints or shapes as desired. In addition, complex facial animations can be used in place of individual FACS units to simplify the facial animation definition. For example, a typical smile might comprise several FACS units activated simultaneously, but you might want a single animation pose that expresses a particular kind of smile that would be difficult to produce from its component FACS units. To do so, define the expression with a motion, attach that motion to a FACS unit, and trigger that unit with a BML command. For example, a BML command that creates a smile from individual FACS units might look like this:

```xml
<face type="FACS" au="6" amount=".5"/>
<face type="FACS" au="12" amount=".5"/>
```

whereas a BML command to trigger the complex facial expression might look like this, assuming that FACS unit 800 has been defined by such an expression:

```xml
<face type="FACS" au="800" amount=".5"/>
```
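If these commands are generated from a script, a small helper keeps the attribute syntax consistent. The sketch below is a hypothetical Python helper written for this page, not part of SmartBody: it only builds the `<face>` elements shown above as a string and does not send them anywhere. It writes amounts as `0.5` rather than `.5`, which we assume SmartBody parses identically.

```python
from xml.sax.saxutils import quoteattr


def face_bml(units):
    """Build concatenated BML <face type="FACS"> elements.

    units is an iterable of (au, amount) pairs, e.g. [(6, 0.5), (12, 0.5)].
    Attribute values are quoted and escaped with quoteattr.
    """
    elements = []
    for au, amount in units:
        elements.append(
            "<face type=\"FACS\" au=%s amount=%s/>"
            % (quoteattr(str(au)), quoteattr(str(amount)))
        )
    return "".join(elements)


# A smile built from individual FACS units, and the single custom unit 800.
smile_from_units = face_bml([(6, 0.5), (12, 0.5)])
smile_custom = face_bml([(800, 0.5)])
print(smile_from_units)
print(smile_custom)
```

Keeping the unit numbers as data makes it easy to switch between the multi-unit smile and a custom unit such as 800 without editing markup by hand.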

Details

Each character requires a Face Definition, which includes both FACS units and visemes. To create a Face Definition for a character, use the following Python command:

```python
face = scene.createFaceDefinition("myface")
```

Next, the Face Definition requires a neutral expression, which is defined as follows:

```python
face.setFaceNeutral(motion)
```

where motion is the name of the animation that describes the neutral expression. Note that the neutral expression is a motion file that contains a single pose. To add FACS units to the Face Definition, use the following:

```python
face.setAU(num, side, motion)
```

where num is the number of the FACS unit, side is "LEFT", "RIGHT" or "BOTH", and motion is the name of the animation to be used for that FACS unit. Note that each FACS motion is a motion file that contains a single pose. For shape-driven faces, use an empty string ("") for the motion. LEFT and RIGHT side animations are not required, but they will be used if a BML command that requires them is issued. Any number of FACS units can be defined; the only one required for other purposes is FACS unit 45, which is used for blinking and soft eyes. To define an arbitrary facial expression, you can use a previously unused num (say, num = 800), which can then be triggered via BML.
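Because AU 45 is needed for blinking and the side must be one of three strings, it can help to check an AU table before passing it to setAU. The following is a hypothetical pre-flight check (check_au_table is not a SmartBody function); it inspects plain Python data only, and the setAU loop is shown as a comment because it needs a running SmartBody scene.

```python
VALID_SIDES = {"LEFT", "RIGHT", "BOTH"}


def check_au_table(au_table):
    """Return a list of problems in (num, side, motion) entries meant for setAU."""
    problems = []
    for num, side, motion in au_table:
        if side not in VALID_SIDES:
            problems.append("AU %s: side must be LEFT, RIGHT or BOTH, got %r" % (num, side))
        if not isinstance(motion, str):
            problems.append("AU %s: motion must be a string (use \"\" for shape-driven faces)" % num)
    if not any(num == 45 for num, _side, _motion in au_table):
        problems.append("AU 45 is missing; it is used for blinking and soft eyes")
    return problems


# A shape-driven face: empty motion names, plus a custom expression on unit 800.
au_table = [
    (12, "BOTH", ""),
    (45, "LEFT", ""),
    (45, "RIGHT", ""),
    (800, "BOTH", ""),
]
print(check_au_table(au_table))

# Inside SmartBody, once the list of problems is empty:
# for num, side, motion in au_table:
#     face.setAU(num, side, motion)
```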

To define a set of visemes which are used to drive the lip animation during character speech, use the following:

```python
face.setViseme(viseme, motion)
```

where viseme is the name of the viseme and motion is the name of the animation that defines it. Note that each viseme motion is a motion file that contains a single pose. For shape-driven faces, use an empty string ("") for the motion. The names of the visemes vary according to the text-to-speech or prerecorded audio component that is connected to SmartBody.

Lip Syncing Text-to-Speech or Prerecorded Audio

SmartBody can use two lip syncing models, which require different sets of visemes: a high-quality method based on phone bigrams, and a low-quality pose-based method.

High Quality Lip Syncing Using the Phone Bigram Method

The recommended method is SmartBody's phone bigram based lip syncing scheme, which the Speech Relays (Microsoft, Festival, CereProc, etc.) use by default. In this method, the phonemes (speech sounds) of an utterance are first mapped to a common set of phonemes, and each pair of consecutive phonemes is then matched with an artist-created animation for that pair. The results are well synchronized with the audio.

We recommend the following set of visemes, which is compatible with the FaceFX software and can be used for both text-to-speech and prerecorded audio:

open, W, ShCh, PBM, fv, wide, tBack, tRoof, tTeeth

| Pose | Description |
|---|---|
| open |  |
| W |  |
| ShCh |  |
| PBM |  |
| fv |  |
| wide |  |
| tBack |  |
| tRoof |  |
| tTeeth |  |
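Viseme names must match what the lip sync component expects. Assuming names are compared exactly, including case (which we have not confirmed), a small hypothetical check like the one below can catch a missing viseme, or a case mismatch such as "Fv" versus "fv", before a Face Definition is built. check_visemes is illustrative only and is not part of SmartBody.

```python
RECOMMENDED_VISEMES = ("open", "W", "ShCh", "PBM", "fv", "wide", "tBack", "tRoof", "tTeeth")


def check_visemes(defined, recommended=RECOMMENDED_VISEMES):
    """Map each recommended viseme that is not defined exactly to a short note."""
    defined = set(defined)
    by_lower = {name.lower(): name for name in defined}
    report = {}
    for name in recommended:
        if name in defined:
            continue
        near = by_lower.get(name.lower())
        report[name] = "defined as %r (case differs)" % near if near else "missing"
    return report


print(check_visemes(["open", "W", "ShCh", "PBM", "Fv", "wide", "tBack", "tRoof"]))
```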

To enable the phone bigram based lip syncing, load the included phone bigram animations once:

```python
scene.run("init-diphoneDefault.py")
```

This creates the default lip sync set, which can be used by any character. Then set the following attributes on each character:

```python
# name is the name of the character
mycharacter = scene.getCharacter(name)
mycharacter.setBoolAttribute("usePhoneBigram", True)
mycharacter.setBoolAttribute("lipSyncSplineCurve", True)
mycharacter.setDoubleAttribute("lipSyncSmoothWindow", 0.2)
mycharacter.setStringAttribute("lipSyncSetName", "default")
```
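The split between the one-time setup script and the per-character attributes can be captured in one function. This sketch uses only the calls shown above (scene.run, scene.getCharacter and the set*Attribute methods); the function name setup_phone_bigram and the character names are hypothetical, and the call itself is left as a comment because scene only exists inside SmartBody.

```python
def setup_phone_bigram(scene, character_names, smooth_window=0.2):
    """Load the default phone bigram set once, then configure each character."""
    # Creates the lip sync set named "default"; run it once, not per character.
    scene.run("init-diphoneDefault.py")
    for name in character_names:
        character = scene.getCharacter(name)
        character.setBoolAttribute("usePhoneBigram", True)
        character.setBoolAttribute("lipSyncSplineCurve", True)
        character.setDoubleAttribute("lipSyncSmoothWindow", smooth_window)
        character.setStringAttribute("lipSyncSetName", "default")


# Inside SmartBody, where scene is predefined:
# setup_phone_bigram(scene, ["mycharacter"])
```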

Setting up the phone bigram curves offline

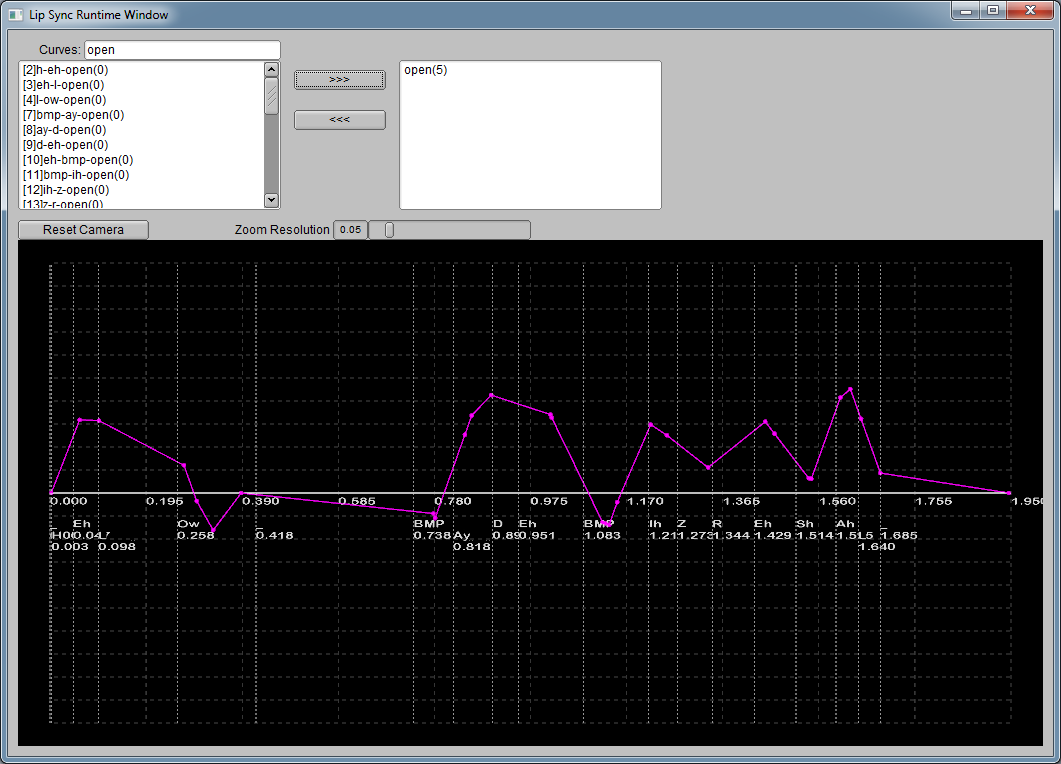

Artists can use the Lip Sync Viewer to mark up phone bigram curves and save them to a .py file.

Run-time operations (behind the scenes)

Illustrations of run-time operations 0 through 5:

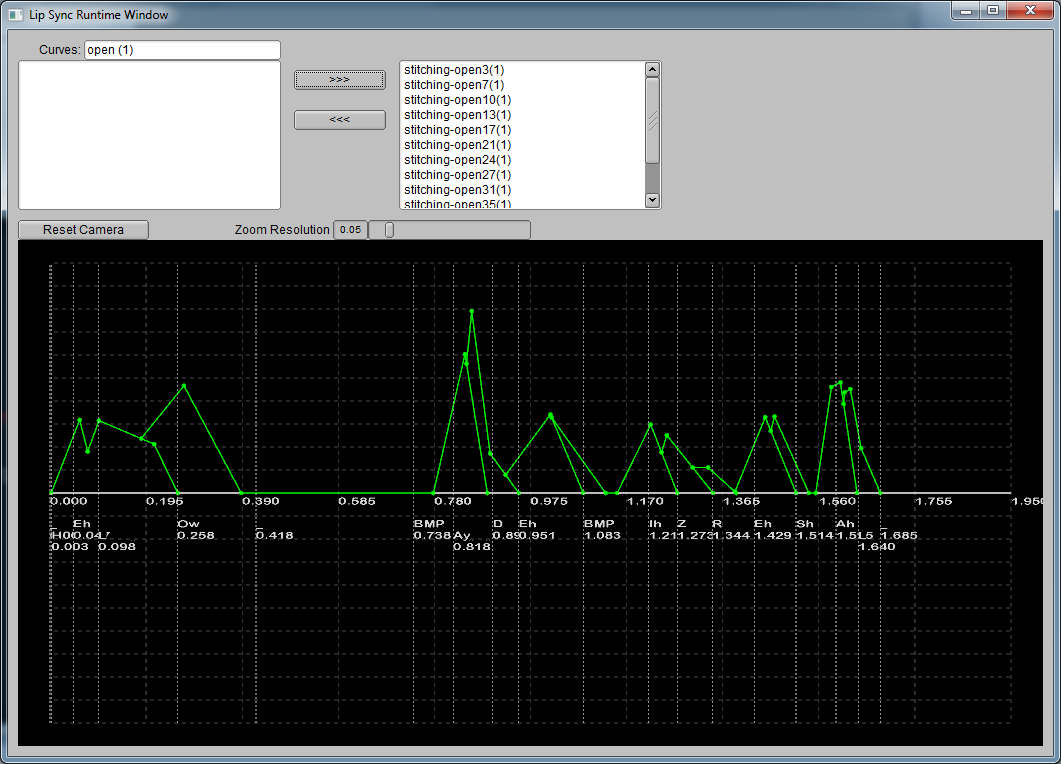

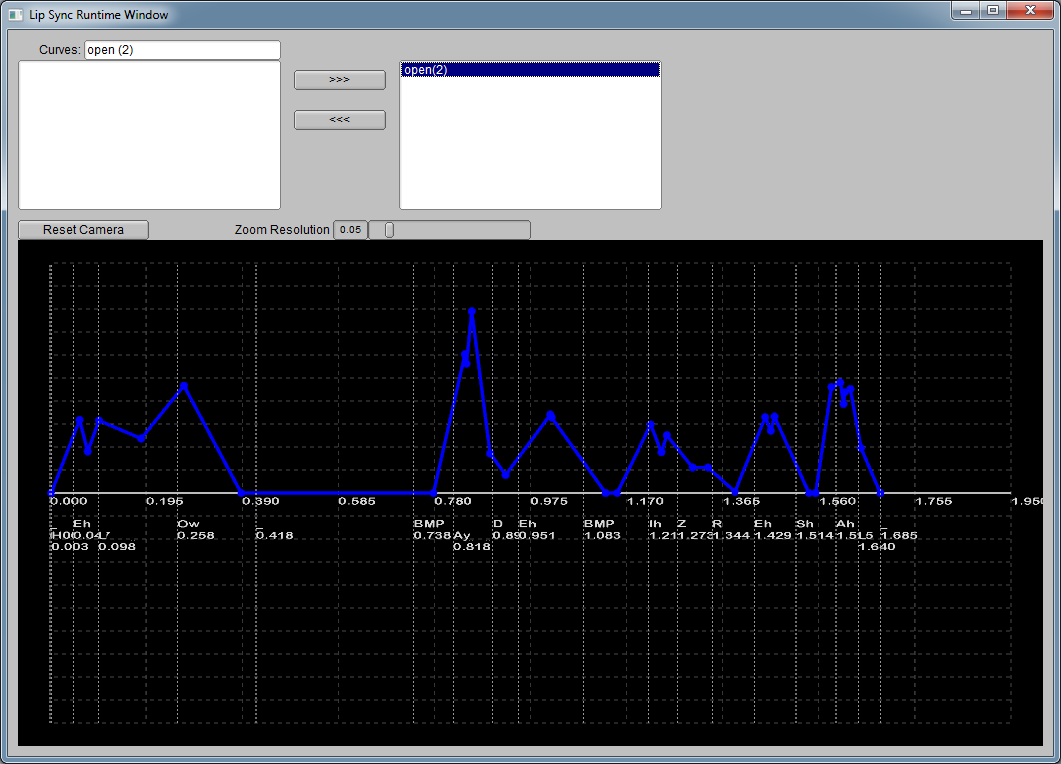

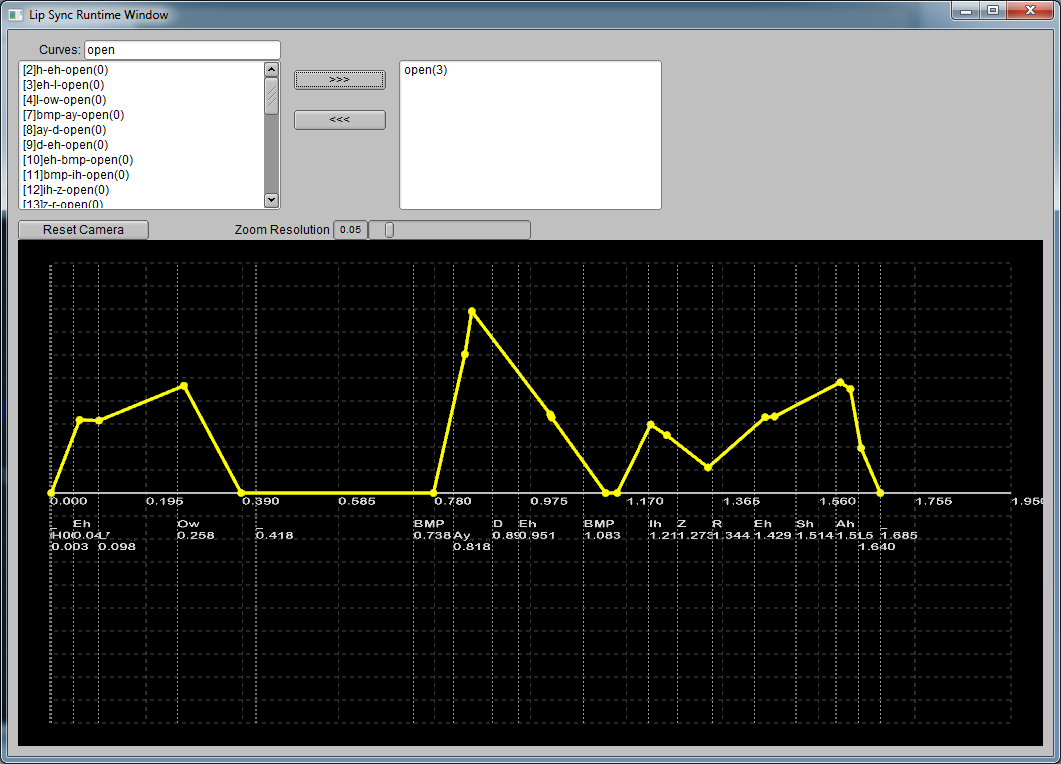

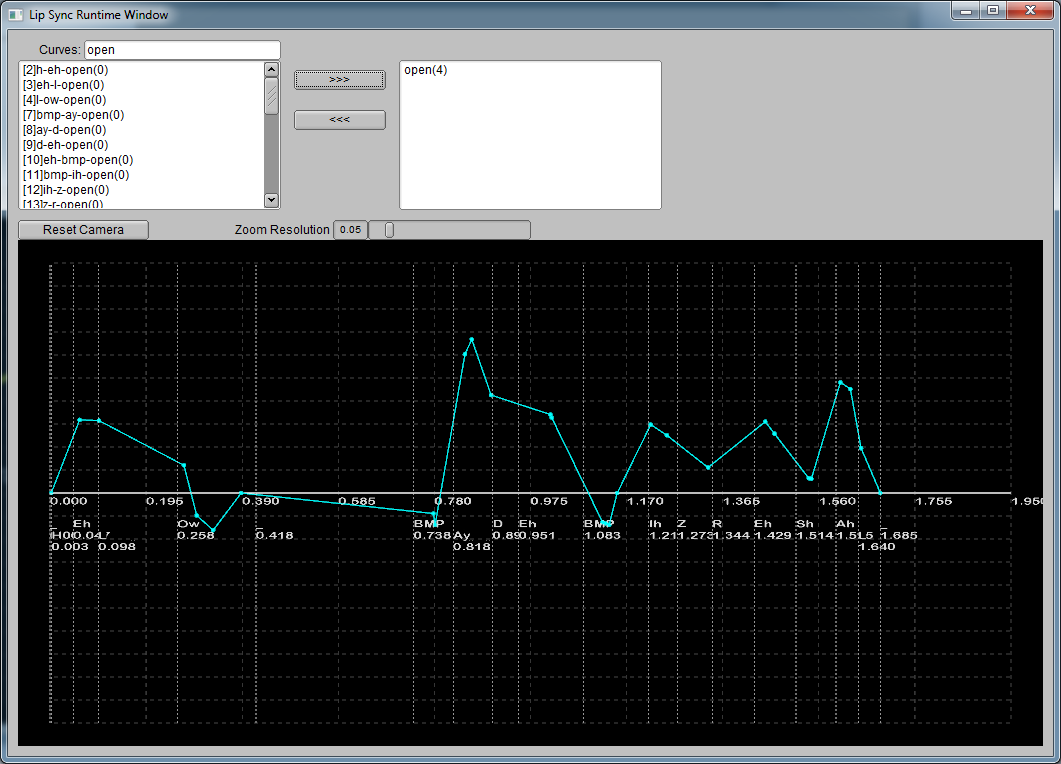

When you execute a speech BML command, the following operations take place behind the scenes (the Lip Sync Runtime Viewer and the Lip Sync Viewer show more detail):

- (0): lay out all phone bigram curves on the timeline.

This step takes all the artist-generated phone bigram curves associated with phoneme pairs and time-warps them to fit the time spans of the corresponding phoneme pairs.

- (1): stitch the curves

This step stitches the overlapping curves for the same face pose.

- (2): extract the envelope of the curves

This step takes the output of step 1 and extracts the envelope of the curves, generating a single complete curve for each face pose.

- (3): smooth curve given window size

This step performs a smoothing pass over each curve, using a user-specified window to scan through the time domain and find local maxima.

- (4): constrain curves given rules defined for different facial poses

Since facial poses are activated by parametric values, any two poses could be combined arbitrarily to form a new face shape. However, certain poses produce unnatural results when activated simultaneously. For example, the 'open' pose should not be activated along with the 'PBM' pose: one opens the mouth and the other closes it, so combining them makes them interfere with and cancel each other, which can eliminate important information from the resulting facial motion. A threshold defines how much two poses may interfere with each other.

- (5): apply speed limit to curve slopes

To produce natural lip syncing animation, a character should move its lips at a reasonable speed. However, the animator may create a bigram curve with a steep slope, and time warping can compress the curve until it becomes excessively sharp. This step caps the parametric speed of the piecewise linear curve at a threshold set by an attribute (lipSyncSpeedLimit).
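The six operations can be illustrated with a small, self-contained simulation. This is not SmartBody's implementation: the curve representation (piecewise-linear (time, value) points authored over [0, 1]), the uniform sampling grid, the local-maximum reading of the smoothing step, and the constraint rule in step 4 are all assumptions made for illustration. Only the order of the steps follows the description above.

```python
from typing import List, Tuple

Curve = List[Tuple[float, float]]  # (time, value) points, sorted by time


def warp(curve: Curve, start: float, end: float) -> Curve:
    """Step 0: map a bigram curve authored over [0, 1] onto [start, end]."""
    return [(start + t * (end - start), v) for t, v in curve]


def sample(curve: Curve, t: float) -> float:
    """Evaluate a piecewise-linear curve at time t (0 outside its span)."""
    if not curve or t < curve[0][0] or t > curve[-1][0]:
        return 0.0
    for (t0, v0), (t1, v1) in zip(curve, curve[1:]):
        if t0 <= t <= t1:
            if t1 == t0:
                return max(v0, v1)
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return curve[-1][1]


def envelope(curves: List[Curve], times: List[float]) -> List[float]:
    """Steps 1-2: stitch overlapping curves of one pose, keep the upper envelope."""
    return [max((sample(c, t) for c in curves), default=0.0) for t in times]


def smooth(values: List[float], half_window: int) -> List[float]:
    """Step 3 (one reading): keep samples that are maxima within +/- half_window
    samples, then interpolate linearly between the kept samples."""
    n = len(values)
    if half_window <= 0 or n < 3:
        return list(values)
    keep = [0]
    for i in range(1, n - 1):
        lo, hi = max(0, i - half_window), i + half_window + 1
        if values[i] == max(values[lo:hi]):
            keep.append(i)
    keep.append(n - 1)
    out = []
    for a, b in zip(keep, keep[1:]):
        for i in range(a, b):
            out.append(values[a] + (values[b] - values[a]) * (i - a) / (b - a))
    out.append(values[-1])
    return out


def constrain(target: List[float], by: List[float], threshold: float) -> List[float]:
    """Step 4 (assumed rule): cap `target` (e.g. open) at threshold minus the
    conflicting pose `by` (e.g. PBM), never going below 0."""
    return [min(v, max(0.0, threshold - b)) for v, b in zip(target, by)]


def speed_limit(values: List[float], dt: float, limit: float) -> List[float]:
    """Step 5: cap the change between consecutive samples at limit * dt."""
    out = list(values[:1])
    step = limit * dt
    for v in values[1:]:
        prev = out[-1]
        out.append(min(max(v, prev - step), prev + step))
    return out


dt = 0.01
times = [i * dt for i in range(101)]  # one second of timeline
bump = [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)]  # hypothetical authored curve
open_curve = envelope([warp(bump, 0.0, 0.4), warp(bump, 0.3, 0.9)], times)
pbm_curve = envelope([warp(bump, 0.35, 0.55)], times)
open_curve = smooth(open_curve, half_window=10)  # roughly a 0.2 s window
open_curve = constrain(open_curve, pbm_curve, threshold=1.0)
open_curve = speed_limit(open_curve, dt, limit=4.0)
```

The demo runs one 'open' channel through all six steps. In this sketch, smooth, constrain and speed_limit loosely correspond to the lipSyncSmoothWindow, openConstraintByPBM and lipSyncSpeedLimit attributes listed below, but the exact semantics SmartBody gives those values may differ.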

Attributes used for the phone bigram based method

- usePhoneBigram: Bool Attribute. Toggles whether to use the phone bigram based method.

- lipSyncConstraint: Bool Attribute. Global toggle for the constraints on face poses.

- constrainFV: Bool Attribute. Whether to constrain face pose FV.

- constrainW: Bool Attribute. Whether to constrain face pose W.

- constrainWide: Bool Attribute. Whether to constrain face pose Wide.

- constrainPBM: Bool Attribute. Whether to constrain face pose PBM.

- constrainShCh: Bool Attribute. Whether to constrain face pose ShCh.

- openConstraintByPBM: Double Attribute. Open facial shape constraint by PBM facial shape.

- wideConstraintByPBM: Double Attribute. Wide facial shape constraint by PBM facial shape.

- shchConstraintByPBM: Double Attribute. ShCh facial shape constraint by PBM facial shape.

- openConstraintByFV: Double Attribute. Open facial shape constraint by FV facial shape.

- wideConstraintByFV: Double Attribute. Wide facial shape constraint by FV facial shape.

- openConstraintByShCh: Double Attribute. Open facial shape constraint by ShCh facial shape.

- openConstraintByW: Double Attribute. Open facial shape constraint by W facial shape.

- openConstraintByWide: Double Attribute. Open facial shape constraint by Wide facial shape.

- lipSyncScale: Double Attribute. Scale factor for lip sync curves.

- lipSyncSetName: String Attribute. Name of the lip sync set to be used when using phone bigram method.

- lipSyncSplineCurve: Bool Attribute. Toggle between spline and linear curve.

- lipSyncSmoothWindow: Double Attribute. Smoothing window size. If it is less than 0, no smoothing is performed.

- lipSyncSpeedLimit: Double Attribute. Speed limit of facial shape movement.
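The constraint and speed-limit attributes are set with the same setBoolAttribute, setDoubleAttribute and setStringAttribute calls used earlier. The sketch below picks the method from each value's Python type; apply_attributes is a hypothetical helper, and the numbers in CONSTRAINT_SETTINGS are placeholders to tune for a particular face, not recommended defaults.

```python
def apply_attributes(character, settings):
    """Call the matching set*Attribute method for each (name, value) pair."""
    for name, value in settings.items():
        # Check bool first: in Python, bool is a subclass of int.
        if isinstance(value, bool):
            character.setBoolAttribute(name, value)
        elif isinstance(value, (int, float)):
            character.setDoubleAttribute(name, float(value))
        elif isinstance(value, str):
            character.setStringAttribute(name, value)
        else:
            raise TypeError("unsupported value for %s: %r" % (name, value))


# Placeholder values for illustration only.
CONSTRAINT_SETTINGS = {
    "lipSyncConstraint": True,
    "constrainPBM": True,
    "openConstraintByPBM": 0.5,
    "lipSyncSpeedLimit": 6.0,
}

# Inside SmartBody:
# apply_attributes(scene.getCharacter(name), CONSTRAINT_SETTINGS)
```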

Face Definition

Once the FACS units and visemes have been added to a Face Definition, the Face Definition should be attached to a character as follows:

```python
mycharacter = scene.getCharacter(name)
mycharacter.setFaceDefinition(face)
```

where name is the name of the character. Note that the same Face Definition can be used for multiple characters, but the final animation will not give the same results if the faces are modeled differently, or if the faces contain a different number of joints.

The following Python example defines a joint-driven face:

```python
face = scene.createFaceDefinition("myface")
face.setFaceNeutral("face_neutral")

# FACS units
face.setAU(1, "LEFT", "fac_1L_inner_brow_raiser")
face.setAU(1, "RIGHT", "fac_1R_inner_brow_raiser")
face.setAU(2, "LEFT", "fac_2L_outer_brow_raiser")
face.setAU(2, "RIGHT", "fac_2R_outer_brow_raiser")
face.setAU(4, "LEFT", "fac_4L_brow_lowerer")
face.setAU(4, "RIGHT", "fac_4R_brow_lowerer")
face.setAU(5, "BOTH", "fac_5_upper_lid_raiser")
face.setAU(6, "BOTH", "fac_6_cheek_raiser")
face.setAU(7, "BOTH", "fac_7_lid_tightener")
face.setAU(9, "BOTH", "fac_9_nose_wrinkler")
face.setAU(10, "BOTH", "fac_10_upper_lip_raiser")
face.setAU(12, "BOTH", "fac_12_lip_corner_puller")
face.setAU(15, "BOTH", "fac_15_lip_corner_depressor")
face.setAU(20, "BOTH", "fac_20_lip_stretcher")
face.setAU(23, "BOTH", "fac_23_lip_tightener")
face.setAU(25, "BOTH", "fac_25_lips_part")
face.setAU(26, "BOTH", "fac_26_jaw_drop")
face.setAU(27, "BOTH", "fac_27_mouth_stretch")
face.setAU(38, "BOTH", "fac_38_nostril_dilator")
face.setAU(39, "BOTH", "fac_39_nostril_compressor")
face.setAU(45, "LEFT", "fac_45L_blink")
face.setAU(45, "RIGHT", "fac_45R_blink")

# higher quality, diphone (phone bigram) based lip syncing
face.setViseme("open", "viseme_open")
face.setViseme("W", "viseme_W")
face.setViseme("ShCh", "viseme_ShCh")
face.setViseme("PBM", "viseme_PBM")
face.setViseme("fv", "viseme_fv")
face.setViseme("wide", "viseme_wide")
face.setViseme("tBack", "viseme_tBack")
face.setViseme("tRoof", "viseme_tRoof")
face.setViseme("tTeeth", "viseme_tTeeth")

# lower quality, simple viseme lip syncing (commented out)
# face.setViseme("Ao", "viseme_ao")
# face.setViseme("D", "viseme_d")
# face.setViseme("EE", "viseme_ee")
# face.setViseme("Er", "viseme_er")
# face.setViseme("f", "viseme_f")
# face.setViseme("j", "viseme_j")
# face.setViseme("KG", "viseme_kg")
# face.setViseme("Ih", "viseme_ih")
# face.setViseme("NG", "viseme_ng")
# face.setViseme("oh", "viseme_oh")
# face.setViseme("OO", "viseme_oo")
# face.setViseme("R", "viseme_r")
# face.setViseme("Th", "viseme_th")
# face.setViseme("Z", "viseme_z")
# face.setViseme("BMP", "viseme_bmp")
# face.setViseme("blink", "fac_45_blink")

# name is the name of the character that should use this face
mycharacter = scene.getCharacter(name)
mycharacter.setFaceDefinition(face)
```
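The same definition can be driven from data instead of one call per line, which also makes it easy to produce a shape-driven variant. This is a hypothetical sketch that uses only the calls shown in this section (createFaceDefinition, setFaceNeutral, setAU, setViseme); build_face, AU_TABLE and BIGRAM_VISEMES are names introduced here, AU_TABLE is abbreviated, and the viseme motion names follow the example's viseme_<name> convention.

```python
# Abbreviated; extend with the remaining FACS units from the example above.
AU_TABLE = [
    (1, "LEFT", "fac_1L_inner_brow_raiser"),
    (1, "RIGHT", "fac_1R_inner_brow_raiser"),
    (12, "BOTH", "fac_12_lip_corner_puller"),
    (45, "LEFT", "fac_45L_blink"),
    (45, "RIGHT", "fac_45R_blink"),
]
BIGRAM_VISEMES = ["open", "W", "ShCh", "PBM", "fv", "wide", "tBack", "tRoof", "tTeeth"]


def build_face(scene, face_name, neutral, au_table, visemes, shape_driven=False):
    """Create a Face Definition from data instead of one call per line."""
    face = scene.createFaceDefinition(face_name)
    face.setFaceNeutral(neutral)
    for num, side, motion in au_table:
        # Shape-driven faces pass an empty string instead of a motion name.
        face.setAU(num, side, "" if shape_driven else motion)
    for viseme in visemes:
        # Follows the example's naming convention: viseme_<name>.
        face.setViseme(viseme, "" if shape_driven else "viseme_" + viseme)
    return face


# Inside SmartBody:
# face = build_face(scene, "myface", "face_neutral", AU_TABLE, BIGRAM_VISEMES)
# scene.getCharacter(name).setFaceDefinition(face)
```

Because the Face Definition can be shared, the returned face can be attached to several characters with setFaceDefinition, subject to the modeling caveat above.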