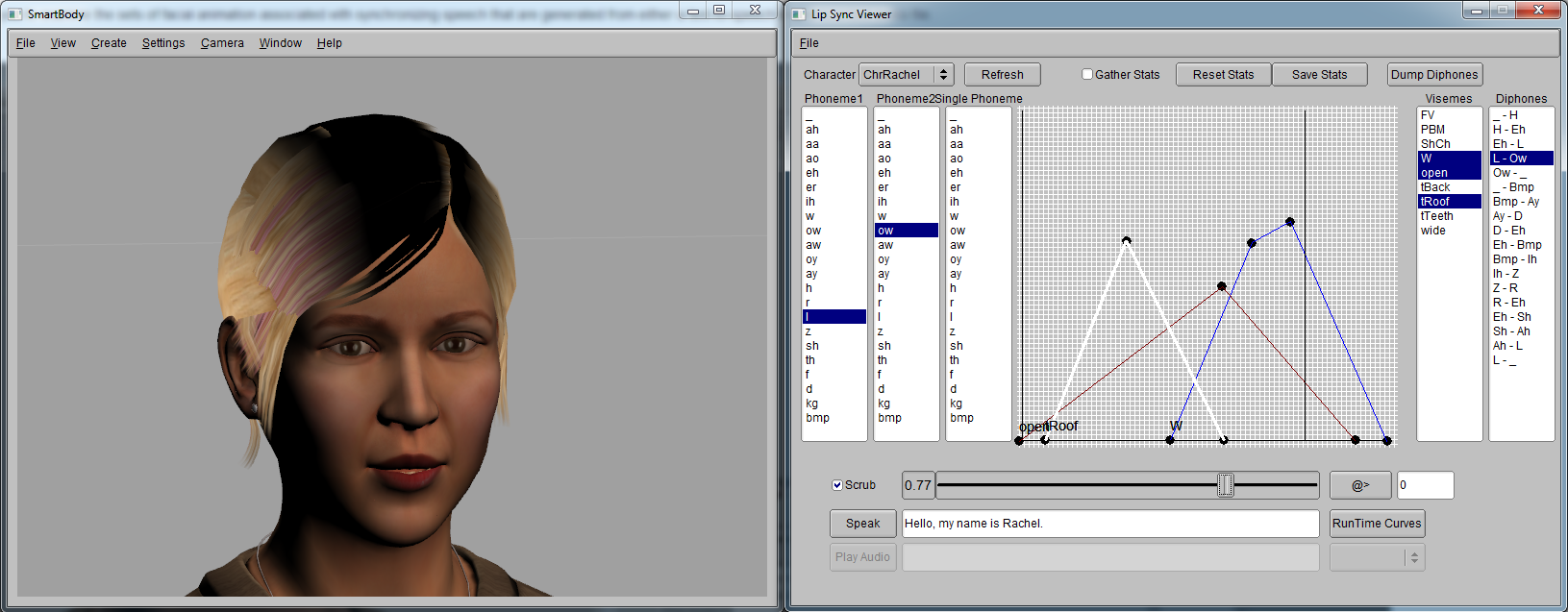

The Lip Sync Viewer lets you examine the facial animation used to synchronize speech, whether that animation is generated from text-to-speech output or from a prerecorded audio file.

The curves show the facial animation associated with an adjacent pair of phonemes, along with the facial poses that are used to generate that motion. In the example above, the curves activate the W, open and tRoof shapes to generate motion for the phonemes L-Ow (as in the word 'low'). The phoneme pairs used for the utterance (in this case, "Hello, my name is Rachel") are displayed on the far right.

In this example, the character is using a text-to-speech (TTS) engine. If the character were using a prerecorded voice, the list of audio that can be played would be displayed in the dropdown box on the bottom right, and the 'Play Audio' button would be enabled. Any adjacent phoneme pair can be scrubbed to show its motion, and can also be edited. The 'Dump Diphones' button saves the modified data to a .py file. Runtime curves can be viewed in the Lip Sync Runtime Window by clicking the 'RunTime Curves' button.
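As a rough illustration of what "adjacent phoneme pairs" means, the sketch below splits a phoneme sequence into its neighboring pairs. The function name `adjacent_pairs` and the phoneme symbols are hypothetical and chosen only for illustration; this is not the viewer's own code, and the viewer's phoneme set may differ.

```python
def adjacent_pairs(phonemes):
    """Return every pair of neighboring phonemes, in utterance order."""
    return list(zip(phonemes, phonemes[1:]))


# Hypothetical phoneme sequence; the symbols are illustrative only.
sequence = ["H", "Eh", "L", "Ow"]

# Join each pair with a hyphen, matching the "L-Ow" style used in the text.
labels = ["-".join(pair) for pair in adjacent_pairs(sequence)]
print(labels)
```

A sequence of n phonemes yields n - 1 pairs, which is why the list on the far right of the viewer grows with the length of the utterance.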

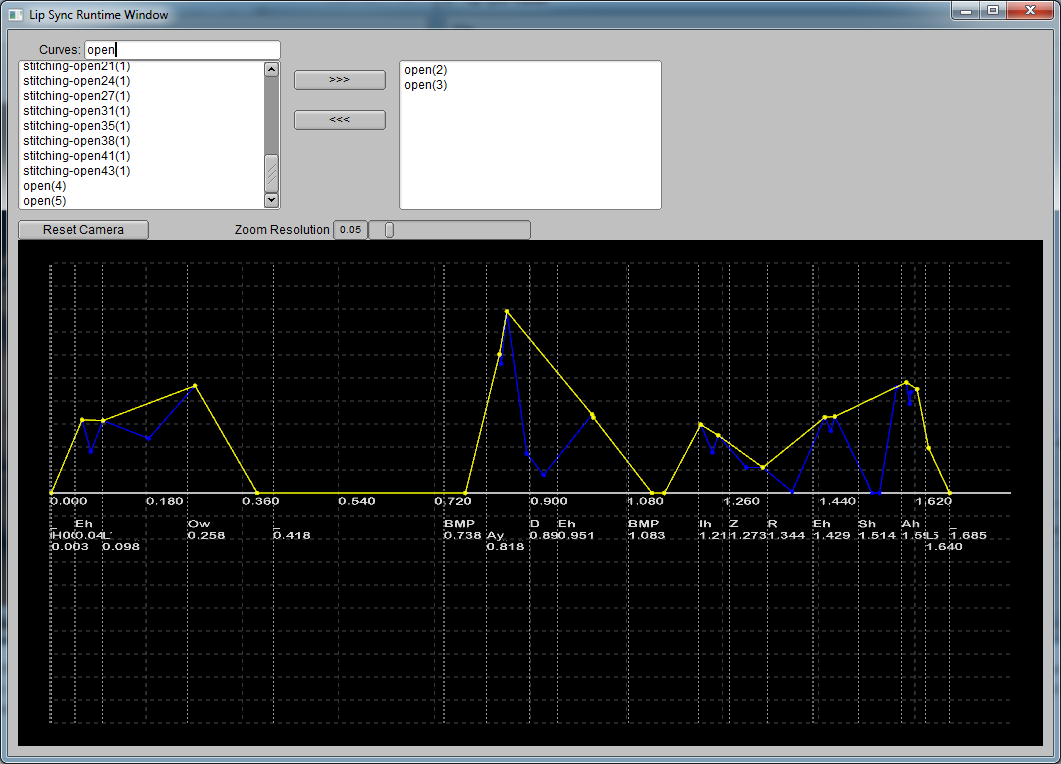

The window above shows the phoneme schedule as well as the result of each operation applied to the animation curves. For example, open(2) is the animation curve for the facial pose "open" after the second operation has been applied to it. You can zoom in and out to inspect the curves more closely, and a filter is provided to help you find specific curves.
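The labeling convention can be made concrete with a small sketch that splits a label such as `open(2)` into a pose name and an operation index, then filters a list of labels by pose. The label format is inferred only from the `open(2)` example; `parse_curve_label` and `filter_curves` are hypothetical helpers, not part of the viewer, and the viewer's own filter may behave differently.

```python
import re

# Assumed label format: a pose name followed by an operation index in parentheses.
LABEL_RE = re.compile(r"^(?P<pose>\w+)\((?P<step>\d+)\)$")


def parse_curve_label(label):
    """Split a label like 'open(2)' into ('open', 2)."""
    match = LABEL_RE.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognized curve label: {label!r}")
    return match.group("pose"), int(match.group("step"))


def filter_curves(labels, pose):
    """Keep only the labels that belong to the given facial pose."""
    return [label for label in labels if parse_curve_label(label)[0] == pose]


# Hypothetical set of curve labels as they might appear in the window.
curve_labels = ["open(1)", "W(1)", "open(2)", "tRoof(1)"]
print(filter_curves(curve_labels, "open"))
```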

More details about this process can be found in the section Configuring Facial Animations and Lip Syncing.